Driving Cache Replacement with ML-based LeCaR

LeCaR components

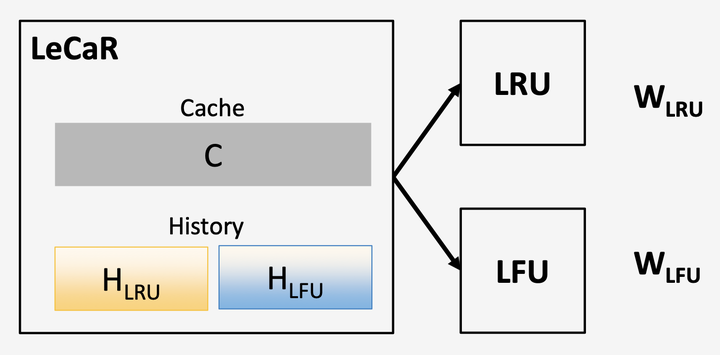

Abstract

Can machine learning (ML) be used to improve on existing cache replacement strategies? We propose a general framework called LeCaR that uses the ML technique of regret minimization to answer the question in the affirmative. Surprisingly, we show that the LeCaR framework outperforms ARC using only two fundamental eviction policies: LRU and LFU. We also show that the performance gap increases as the available cache gets smaller relative to the size of the working set.
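To make the idea concrete, here is a minimal Python sketch of a cache that chooses between LRU and LFU eviction by regret minimization. The abstract does not spell out the update rule, so everything specific here is an assumption made for illustration: the class name `RegretMinimizingCache`, the multiplicative weight update, the per-policy eviction histories, and the `learning_rate` and `discount` values. It should be read as one plausible instance of the technique, not as the authors' implementation.

```python
import math
import random
from collections import OrderedDict


class RegretMinimizingCache:
    """Toy cache that learns online whether to evict by LRU or by LFU."""

    def __init__(self, capacity, learning_rate=0.45, seed=0):
        self.capacity = capacity
        self.learning_rate = learning_rate          # assumed value, illustrative
        self.discount = 0.005 ** (1.0 / capacity)   # assumed decay for old mistakes
        self.weights = {"LRU": 0.5, "LFU": 0.5}     # probability of using each policy
        self.cache = OrderedDict()                  # key -> None, ordered by recency
        self.freq = {}                              # key -> access count (never pruned here)
        # One bounded history of evicted keys per policy: key -> eviction time.
        self.history = {"LRU": OrderedDict(), "LFU": OrderedDict()}
        self.clock = 0
        self.rng = random.Random(seed)

    def _penalize(self, policy, evicted_at):
        # `policy` evicted a key that was requested again, so the other
        # policy's weight grows; older mistakes count for less.
        other = "LFU" if policy == "LRU" else "LRU"
        reward = self.discount ** (self.clock - evicted_at)
        self.weights[other] *= math.exp(self.learning_rate * reward)
        total = sum(self.weights.values())
        for name in self.weights:
            self.weights[name] /= total

    def _evict(self):
        # Sample the policy to follow in proportion to the current weights.
        policy = "LRU" if self.rng.random() < self.weights["LRU"] else "LFU"
        if policy == "LRU":
            victim = next(iter(self.cache))         # least recently used key
        else:
            # Least frequently used key; ties go to the least recent one.
            victim = min(self.cache, key=lambda k: self.freq[k])
        del self.cache[victim]
        hist = self.history[policy]
        hist[victim] = self.clock
        if len(hist) > self.capacity:
            hist.popitem(last=False)                # drop the oldest history entry

    def access(self, key):
        """Record one request; return True on a hit, False on a miss."""
        self.clock += 1
        self.freq[key] = self.freq.get(key, 0) + 1
        if key in self.cache:
            self.cache.move_to_end(key)
            return True
        for policy, hist in self.history.items():
            if key in hist:
                self._penalize(policy, hist.pop(key))
        if len(self.cache) >= self.capacity:
            self._evict()
        self.cache[key] = None
        return False
```

Feeding a request trace through `access` and counting the `True` results gives a hit rate that can be compared against plain LRU or LFU at the same capacity; shrinking `capacity` relative to the number of distinct keys is the regime the abstract highlights.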

Publication

USENIX Workshop on Hot Topics in Storage and File Systems (HotStorage '18)